I am Tunyu Zhang (张焞宇), a first-year Ph.D. student in the Department of Computer Science at Rutgers University, advised by Prof. Dimitris N. Metaxas. I received my bachelor's degree from the University of Science and Technology of China (USTC) in 2025.

My research interests include large language model reasoning, diffusion language models, uncertainty estimation, and efficient training of generative models.

🔥 News

- 2026.01: 🎉🎉 Our paper TokUR was accepted to ICLR 2026!

- 2025.09: 🎉🎉 Our paper TokUR on Bayesian LLM reasoning was accepted to the NeurIPS 2025 Workshop FoRLM!

- 2025.08: 🎉🎉 I will join Professor Dimitris Metaxas’s group at Rutgers to pursue my Ph.D.

📝 Publications

“*” denotes equal contribution.

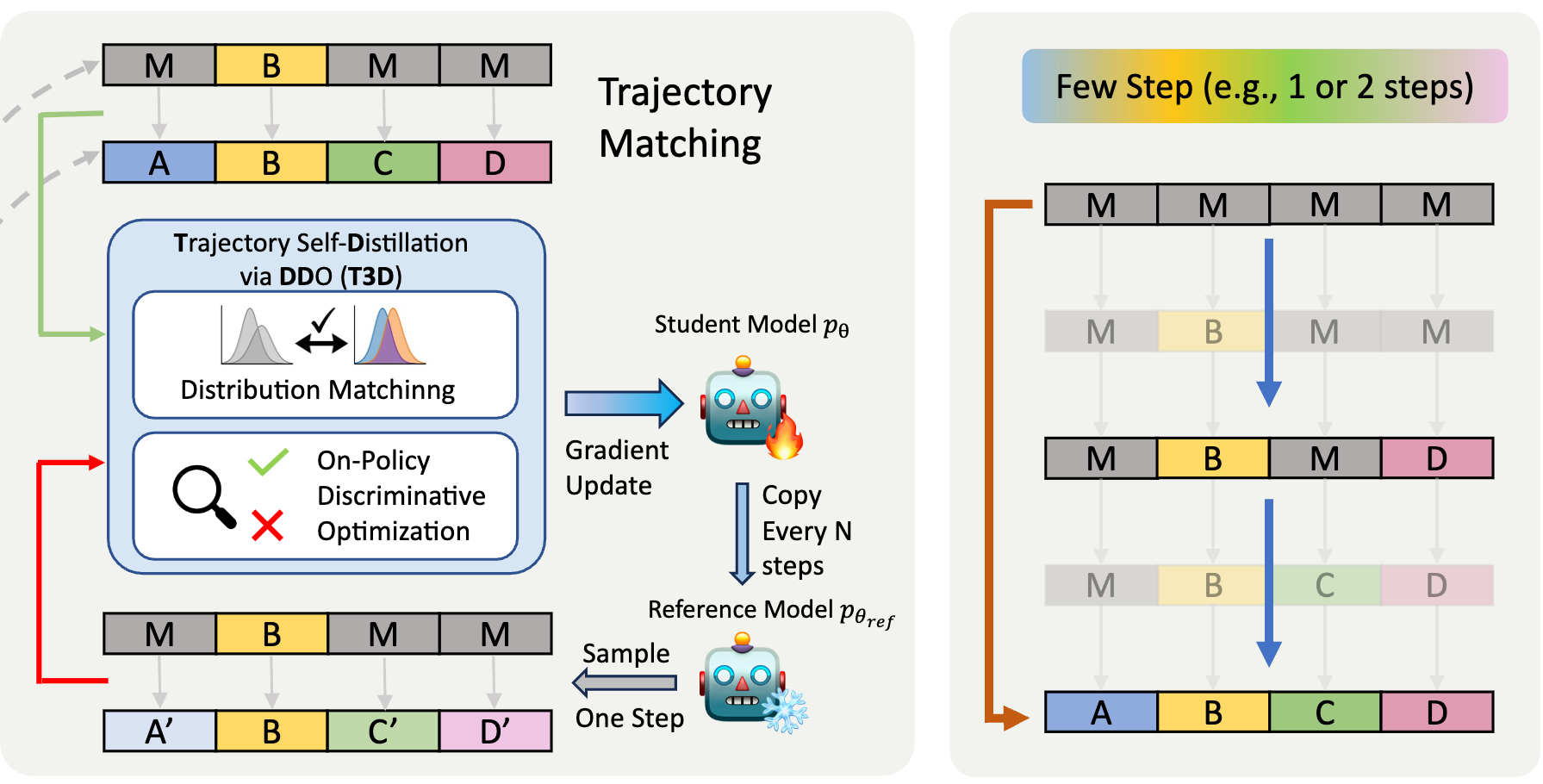

T3D: Trajectory Self-Distillation via Direct Discriminative Optimization for Efficient Diffusion Language Models

Tunyu Zhang*, Xinxi Zhang*, Ligong Han, Haizhou Shi, Xiaoxiao He, Zhuowei Li, Hao Wang, Kai Xu, Akash Srivastava, Hao Wang, Vladimir Pavlovic, Dimitris Metaxas

Paper | Code | Slides

- T3D is a training framework for efficient diffusion language models via trajectory self-distillation.

- T3D uses Direct Discriminative Optimization (DDO) to replace mode-covering objectives with a mode-seeking training signal.

- The framework enables aggressive few-step generation while preserving full-step diffusion capabilities and reasoning performance.

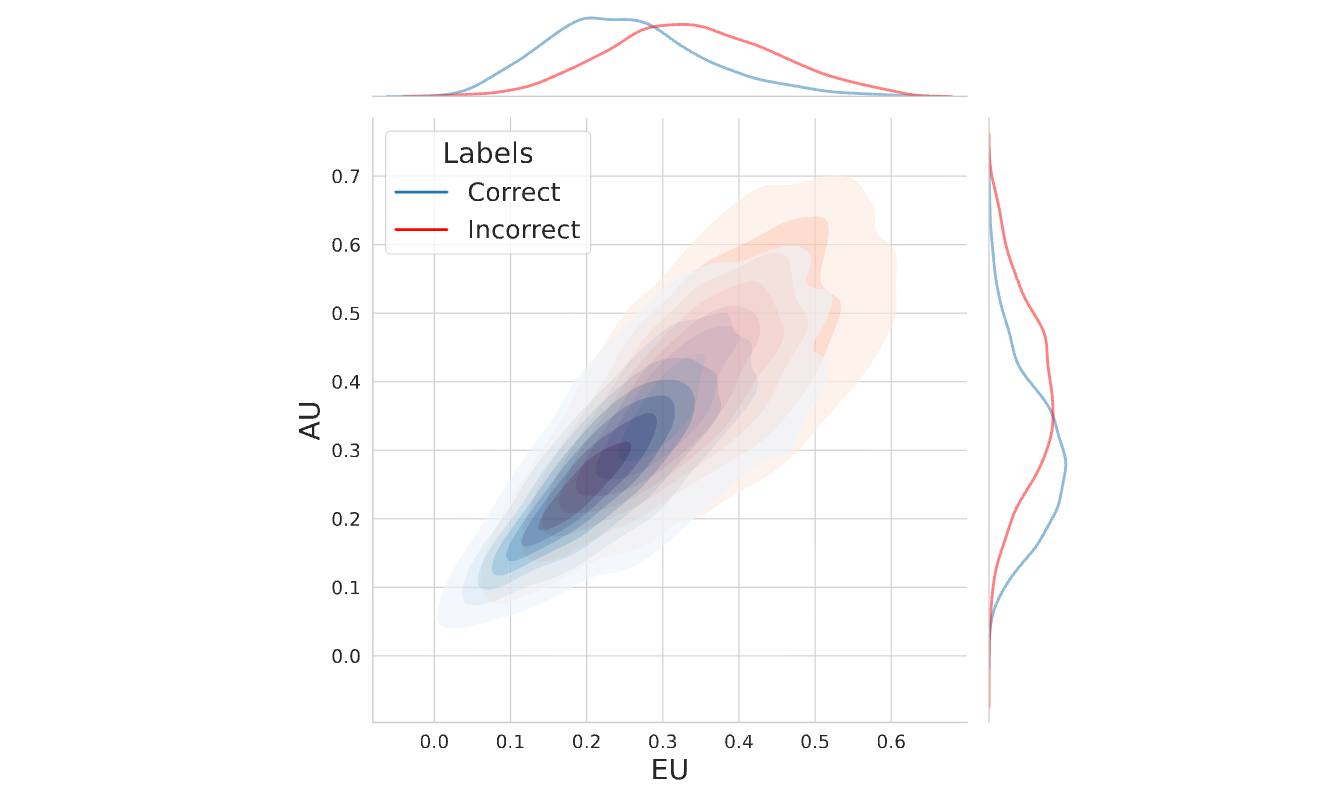

TokUR: Token-Level Uncertainty Estimation for Large Language Model Reasoning

Tunyu Zhang*, Haizhou Shi*, Yibin Wang, Hengyi Wang, Xiaoxiao He, Zhuowei Li, Haoxian Chen, Ligong Han, Kai Xu, Huan Zhang, Dimitris Metaxas, Hao Wang

Paper

- We propose TokUR, a framework for token-level uncertainty estimation tailored for LLM reasoning.

- TokUR introduces a low-rank stochastic perturbation mechanism to approximate predictive distributions efficiently.

- The framework enables more reliable multi-step reasoning and provides uncertainty-aware signals for downstream tasks.

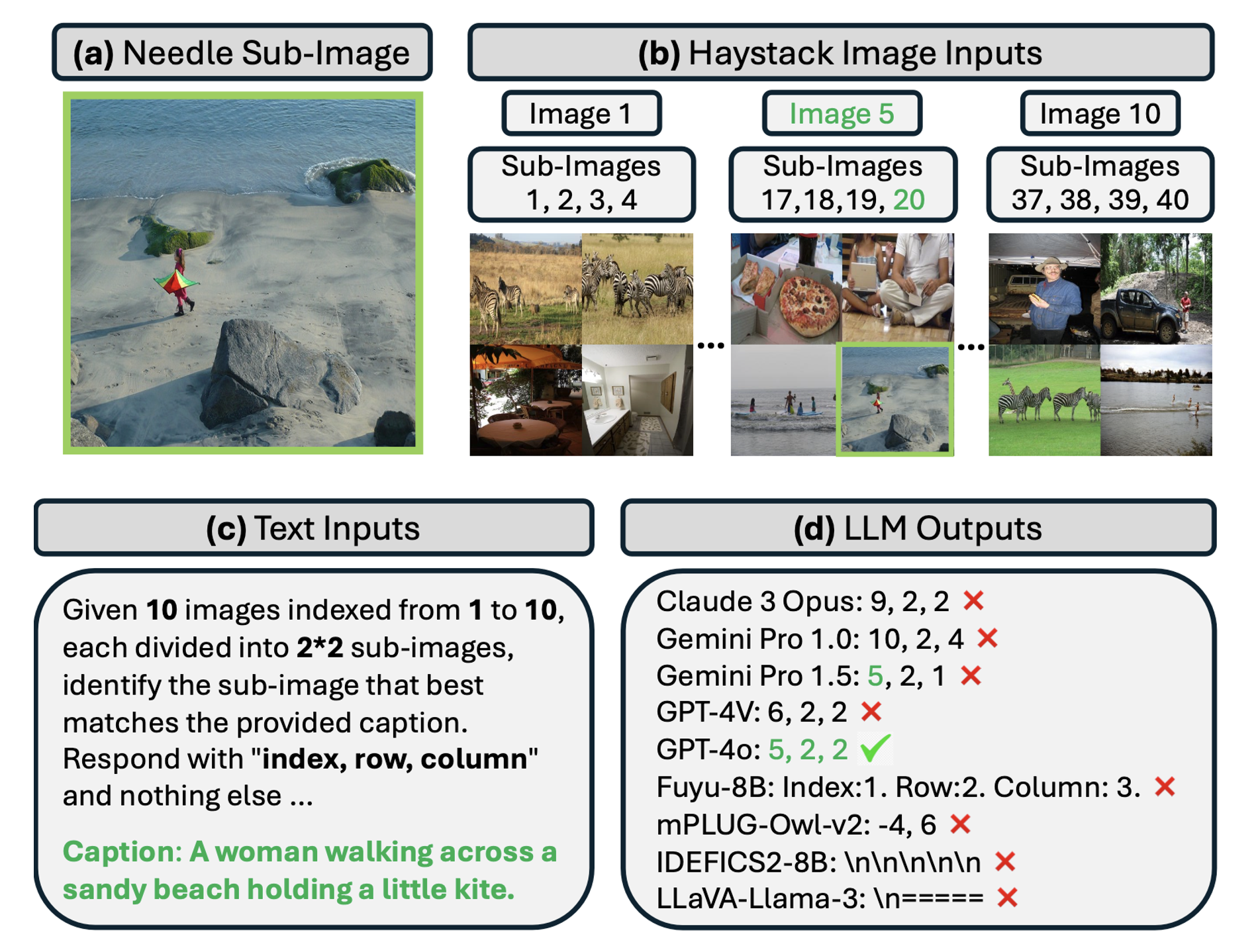

Multimodal Needle in a Haystack: Benchmarking Long-Context Capability of Multimodal Large Language Models

Hengyi Wang, Haizhou Shi, Shiwei Tan, Weiyi Qin, Wenyuan Wang, Tunyu Zhang, Akshay Nambi, Tanuja Ganu, Hao Wang

- MMNeedle provides a systematic evaluation framework for long-context multimodal understanding.

- It enables controlled benchmarking of retrieval and reasoning over large visual contexts, and reveals robustness challenges in current multimodal LLMs.

Complex Networks

- Study of nonequilibrium phase transitions mechanisms in exclusive network and node model of heterogeneous assignment based on real experimental data of KIF3AC and KIF3CC motors, EPJP 2022

- Physical mechanisms of exit dynamics in microchannels of nonequilibrium transport systems, IJMP 2024

🎖 Honors and Awards

- 2025.06 Outstanding Undergraduate Thesis Award, University of Science and Technology of China

- 2022.12 Second Prize, Asia and Pacific Mathematical Contest in Modeling (APMCM)

- 2022.05 Outstanding Student Scholarship (Gold Award), University of Science and Technology of China

📖 Education

- 2021.09 - 2025.06, University of Science and Technology of China, Hefei.

💬 Invited Talks

- 2026.02, Few-Step Diffusion Language Models (Red Hat AI Innovation Team, Random Sample Talk) Slides.

💻 Internships

- 2024.06 - 2025.08, Research Assistant at Rutgers University

- 2023.06 - 2024.05, Research Assistant at University of Hong Kong (HKU)